The transition from a high-performing algorithm in a laboratory setting to a robust, operational asset is the defining challenge of applied artificial intelligence. Many promising models falter at this stage, not due to algorithmic flaws, but because of the immense engineering complexity involved.

At AI-Innovate, we specialize in bridging this gap, transforming theoretical potential into practical, industrial-grade solutions. This article provides a technical blueprint, moving beyond simplistic narratives to dissect the core engineering disciplines required to successfully implement and sustain Machine Learning in Production.

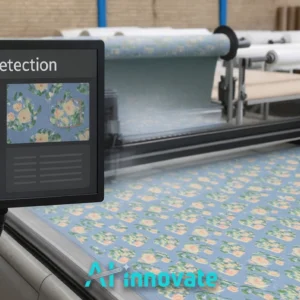

AI-Powered QA You Can Trust

Fast, reliable, and scalable assurance across production lines.

Beyond the Algorithm

The siren call of high accuracy scores often creates a misleading focal point in machine learning projects. While a precise model is a prerequisite, it represents a mere fraction of a successful production system.

The reality is that the surrounding infrastructure—the data pipelines, deployment mechanisms, monitoring tools, and automation scripts—constitutes the vast majority of the work and is the true determinant of a project’s long-term value and reliability.

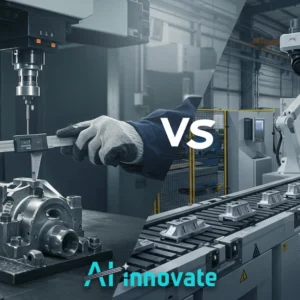

The focus must shift from merely building models to engineering holistic, end-to-end systems. This distinction crystallizes into two competing viewpoints:

- Model-Centric View: Success is measured by model accuracy on a static test dataset. The model is treated as the final artifact.

- System-Centric View: Success is measured by the overall system’s impact on business goals (e.g., reduced waste, increased efficiency). The model is treated as one dynamic component within a larger, interconnected system.

Forging the Data Foundry

At the heart of any resilient ML system lies its data infrastructure—a veritable “foundry” where raw information is processed into a refined, reliable asset. The quality of this raw material directly dictates the quality of the final product.

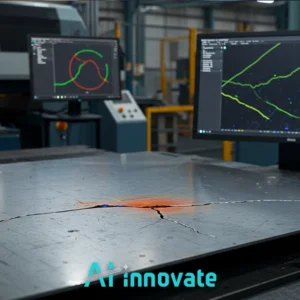

Neglecting this foundation introduces instability and unpredictability, rendering even the most sophisticated algorithm useless. An industrial-grade approach to data management hinges on three core pillars, which are crucial for applications ranging from finance to specialized tasks like metal defect detection.

Data Integrity Pipelines

These are automated workflows designed to ingest, clean, transform, and validate data before it ever reaches the model. This includes schema checks, outlier detection, and statistical validation to ensure that the data fed into the training and inference processes is consistent and clean, preventing garbage-in-garbage-out scenarios.

Immutable Data Versioning

Just as code is version-controlled, so too must be data. Using tools to version datasets ensures that every experiment and every model training run is fully reproducible. This traceability is non-negotiable for debugging, auditing, and understanding how changes in data impact model behavior over time.

Proactive Quality Monitoring

Production data is not static; it drifts. Proactive monitoring involves continuously tracking the statistical properties of incoming data to detect “data drift” or “concept drift”—subtle shifts that can degrade model performance. Automated alerts for such deviations enable teams to intervene before they impact business outcomes.

Bridging Code and Reality to Machine Learning in Production

Transforming a functional piece of code from a developer’s machine into a scalable, live service is a significant engineering hurdle. This process is the bridge between the controlled environment of development and the dynamic, unpredictable nature of the real world.

A failure to construct this bridge methodically leads to fragile, unmaintainable systems. The engineering discipline required to achieve this Machine Learning in Production rests on several key practices:

- CI/CD Automation: Continuous Integration and Continuous Deployment (CI/CD) pipelines automate the building, testing, and deployment of ML systems. Every code change automatically triggers a series of validation steps, ensuring that only reliable code is pushed to production, drastically reducing manual errors and increasing deployment velocity.

- Containerization: Tools like Docker are used to package the application, its dependencies, and its configurations into a single, isolated “container.” This guarantees that the system runs identically, regardless of the environment, eliminating the “it works on my machine” problem.

- Orchestration: As demand fluctuates, the system must scale accordingly. Orchestration platforms like Kubernetes automate the management of these containers, handling scaling, load balancing, and self-healing to ensure the service remains highly available and performant.

Operational Vigilance

Deployment is not a finish line; it is the starting gun for continuous operational oversight. A model in production is a living entity that requires constant attention to ensure it performs as expected and delivers consistent value.

This “operational vigilance” is a data-driven process that safeguards the system against degradation and unforeseen issues. Effective monitoring requires a dashboard of vital signs to ensure the system, whether it’s used for financial predictions or real-time defect analysis, remains healthy.

- Performance Metrics: Tracking technical metrics like request latency, throughput, and error rates is essential for gauging the system’s operational health and user experience.

- Model Drift and Decay: This involves monitoring the model’s predictive accuracy over time. A decline in performance (decay) often signals that the model is no longer aligned with the current data distribution (drift) and needs to be retrained.

- Resource Utilization: Monitoring CPU, memory, and disk usage is critical for managing operational costs and ensuring the infrastructure is scaled appropriately to handle the workload without waste.

Thinking in Systems

A model, no matter how accurate, does not operate in a vacuum. It is a component embedded within a larger network of business processes, user interfaces, and human workflows. The ultimate value of any AI implementation is realized only when it is seamlessly integrated with these other components to achieve a broader system goal.

As system thinker Donella Meadows defined it, a system is “a set of inter-related components that work together in a particular environment to perform whatever functions are required to achieve the system’s objective.”

For an industrial leader, this means understanding that a model for machine learning for manufacturing process optimization is not just a predictive tool; it is an engine that directly impacts inventory management, supply chain logistics, and overall plant efficiency. The success of Machine Learning in Production is therefore a measure of its harmonious integration into the business ecosystem.

Accelerating Applied Intelligence

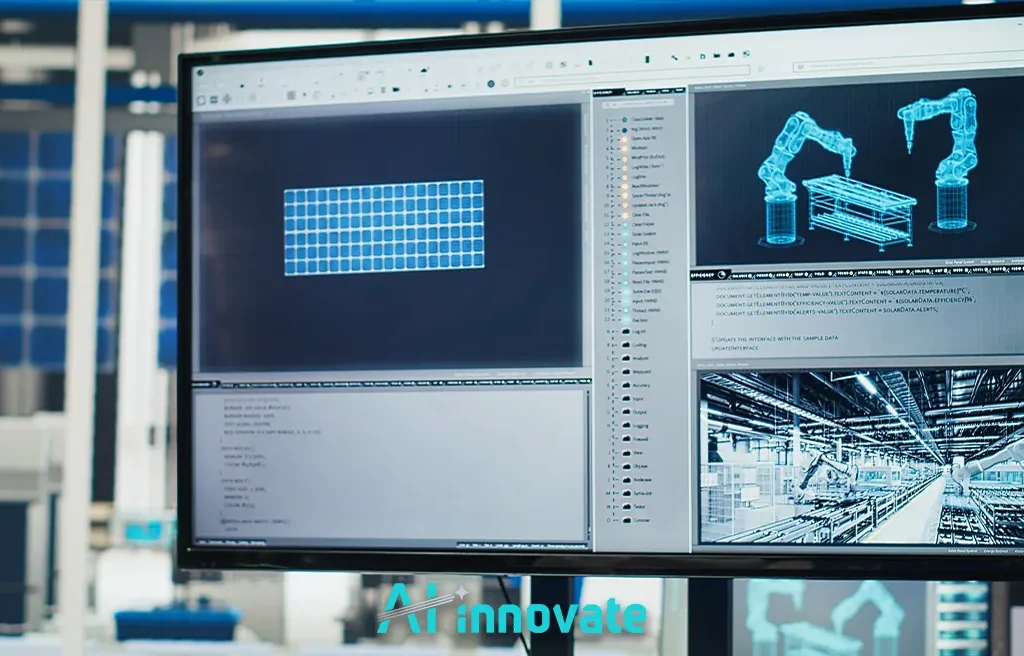

Navigating this complex landscape requires more than just best practices; it demands specialized, purpose-built tools that streamline development and deployment. This is where AI-Innovate provides a distinct advantage, offering practical solutions that address the specific pain points of both industrial leaders and technical innovators. Our focus is to make sophisticated Machine Learning in Production both accessible and effective.

For Industrial Leaders

Your goal is clear: reduce costs, minimize waste, and guarantee quality. Our AI2Eye system is engineered precisely for this. It goes beyond simple defect detection to provide an integrated platform for process optimization.

By identifying inefficiencies on the production line in real-time—from fabric defect detection to identifying microscopic flaws in polymers—AI2Eye delivers a tangible ROI by transforming your quality control from a cost center into a driver of efficiency.

Read Also: Machine Learning in Quality Control – Smarter Inspections

For Technical Innovators

Your challenge is to innovate faster, unconstrained by hardware limitations and lengthy procurement cycles. Our AI2Cam is a powerful camera emulator that liberates your R&D process.

By simulating a vast array of industrial cameras and environmental conditions directly on your computer, AI2Cam allows you to prototype, test, and validate machine vision applications at a fraction of the time and cost. It accelerates your development lifecycle, enabling you and your team to focus on innovation, not on hardware logistics.

Applied Intelligence in Action: Real-World Use Cases and Industry Examples

The true measure of machine learning in production is not in laboratory benchmarks, but in the tangible, sustained value it delivers across industries. When deployed with the right infrastructure and operational vigilance, models become embedded engines of transformation—optimizing processes, reducing waste, and enabling decisions at unprecedented speed and scale. Below are examples that illustrate the diverse impact of machine learning in production:

- Predictive Maintenance: Anticipating equipment failures before they occur allows factories to schedule interventions strategically, reducing downtime and extending asset lifespans. Sensors feed real-time data into models that detect early warning patterns invisible to human inspection.

- Energy Optimization: Intelligent control systems dynamically adjust power usage in manufacturing plants, data centers, or logistics hubs—balancing output with consumption. This minimizes costs while supporting sustainability goals.

- Quality Assurance at Scale: High-resolution imaging paired with computer vision models can identify microscopic defects in materials or products instantly, ensuring consistent quality without slowing production lines.

- Supply Chain Forecasting: By analyzing historical sales, market signals, and supplier data, predictive models improve demand planning, optimize inventory, and mitigate bottlenecks before they ripple through operations.

- Process Automation in Logistics: Autonomous decision systems route shipments, allocate warehouse space, and prioritize tasks in real time, adapting to sudden changes in demand or supply constraints.

Each of these examples underscores the shift from isolated prototypes to integrated, business-critical systems. The enduring success of machine learning in production lies in its seamless fusion with operational realities, delivering measurable outcomes that matter most to the enterprise.

Designing for Trust and Resilience

A truly production-grade system must not only perform; it must be dependable, equitable, and resilient. Trust is built on transparency and fairness, while resilience is the ability of the system to handle unexpected inputs and inevitable model errors gracefully.

This advanced stage of Machine Learning in Production moves beyond functionality to focus on responsibility and robustness, ensuring the system can be relied upon in critical applications. Building this requires a deliberate focus on several key engineering principles:

- Implement Robust Fail-safes: Design the system with non-ML backup mechanisms that can take over or trigger an alert if the model’s predictions are out of bounds or its confidence is too low.

- Audit for Bias: Proactively test the model for performance disparities across different data segments to identify and mitigate potential biases that could lead to unfair or inequitable outcomes.

- Ensure Operational Transparency: Maintain comprehensive logs and implement interpretability techniques that allow stakeholders to understand why a model made a particular decision, especially in cases of failure.

Conclusion

The journey from a theoretical algorithm to a valuable business asset is an engineering discipline, not merely a data science exercise. It demands a holistic, system-level perspective that encompasses robust data infrastructure, automated deployment, and continuous operational vigilance. The success of Machine Learning in Production is ultimately measured by its ability to deliver reliable, scalable, and trustworthy value within a real-world context. This requires a fusion of deep technical expertise and strategic vision—a fusion we are dedicated to delivering at AI-Innovate.