The modern textile industry operates on a challenging premise: delivering flawless products at a scale and speed that often exceeds human capability for quality assurance. This creates a critical vulnerability where minor material flaws can lead to significant financial loss and brand erosion.

At AI-Innovate, we bridge this gap by engineering intelligent, practical software that addresses these real-world industrial challenges head-on. This article provides a data-driven technical analysis of automated Textile Defect Detection, moving from foundational concepts and performance benchmarks to global integration strategies and the tools that accelerate development in this transformative field.

Quantifying the Manual Inspection Bottleneck

Before embracing automation, it is crucial to understand the clear, quantifiable limitations of manual inspection. The reliance on human operators introduces inherent variability and a ceiling on efficiency that advanced manufacturing cannot afford.

Decades of practice show that while expertise is valuable, it cannot overcome fundamental human constraints in speed, endurance, and perceptual accuracy. To truly appreciate the shift towards automation, it is essential to examine the tangible data points that define this bottleneck:

- Speed Limitation: Manual inspection tops out at roughly 20-30 meters of fabric per minute, and a human inspector’s focus wanes significantly after 20-30 minutes of continuous work, so even that ceiling is hard to sustain.

- Accuracy Decay: While the theoretical maximum detection rate for manual inspection is around 90%, real-world performance in factories often drops to an average of 65% due to fatigue and environmental factors.

- Waste Generation: On an industrial scale, these inefficiencies contribute to staggering waste. The textile industry generates roughly 92 million tons of waste annually, an estimated 25% of it during the production phase alone, much of which is related to undetected or late-detected defects.
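The figures above lend themselves to a quick back-of-the-envelope calculation. The Python sketch below applies the quoted 25% production-phase share to the 92-million-ton total. It also estimates how many inspection stations an idealized line would need at the 20-30 m/min human ceiling. The 60 m/min line speed and the helper names are hypothetical. The model deliberately ignores fatigue, breaks, and accuracy decay, so it is an optimistic lower bound.

```python
import math

ANNUAL_WASTE_TONS = 92_000_000   # total annual textile waste quoted in the article
PRODUCTION_PHASE_SHARE = 0.25    # share attributed to the production phase in the article


def production_phase_waste(total_tons: float, share: float) -> float:
    """Tons of waste attributed to the production phase."""
    return total_tons * share


def inspectors_needed(line_speed_m_per_min: float, inspector_speed_m_per_min: float) -> int:
    """Idealized count of inspection stations whose combined speed matches the line.

    Hypothetical model: assumes each inspector sustains the ceiling speed indefinitely,
    which the fatigue figures above show is optimistic.
    """
    return math.ceil(line_speed_m_per_min / inspector_speed_m_per_min)


if __name__ == "__main__":
    print(production_phase_waste(ANNUAL_WASTE_TONS, PRODUCTION_PHASE_SHARE))
    line_speed = 60.0  # hypothetical production line speed in m/min
    for ceiling in (30.0, 20.0):
        print(ceiling, inspectors_needed(line_speed, ceiling))
```

With these inputs, production-phase waste comes to 23 million tons a year, and a 60 m/min line would need two to three stations even before fatigue is taken into account.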


Machine Vision in Micro-Defect Analysis

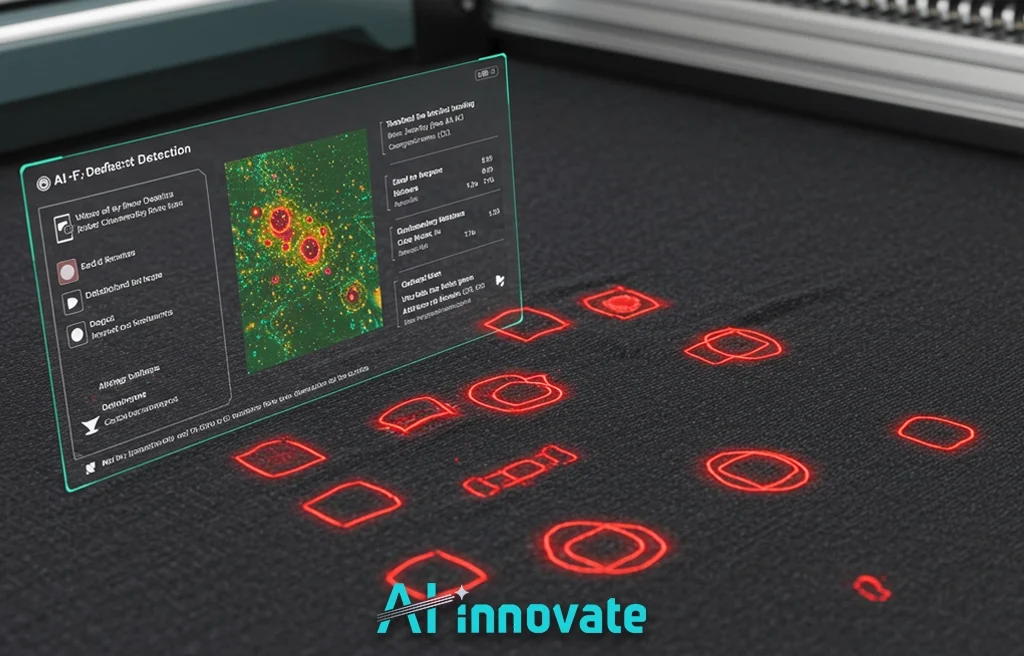

Automated systems transcend human limitations by leveraging machine vision for defect detection, a field that combines high-fidelity imaging with sophisticated analytical models. These systems don’t just mimic human sight; they enhance it to a microscopic level of precision, operating tirelessly at speeds that match modern production lines. The power of this technology stems from two key advancements that work in concert:

High-Resolution Imaging Technologies

The process begins with capturing a high-fidelity digital image of the fabric. Systems often employ high-resolution industrial cameras, some with sensors of up to 50 megapixels, to scan the entire width of the material as it moves.

Paired with controlled, high-intensity lighting, this setup captures minute details and produces a data-rich image that serves as the foundation for analysis, making even subtle variations visible to the AI.
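How much detail a given sensor actually resolves depends on how much fabric it covers. The Python sketch below works through that arithmetic under stated assumptions. It assumes a hypothetical ~50 MP sensor of 8192 x 6144 pixels spanning a hypothetical 1.8 m fabric width, with the line running at 30 m/min. It also uses a rule-of-thumb assumption that a defect must cover at least 3 pixels to be detected reliably. Lens distortion, frame overlap, and motion blur are ignored.

```python
SENSOR_WIDTH_PX = 8192    # hypothetical ~50 MP sensor: 8192 x 6144 pixels
SENSOR_HEIGHT_PX = 6144
FABRIC_WIDTH_MM = 1800.0  # hypothetical fabric width imaged across the sensor width
LINE_SPEED_M_PER_MIN = 30.0  # hypothetical line speed


def mm_per_pixel(field_of_view_mm: float, pixels: int) -> float:
    """Fabric distance covered by a single pixel."""
    return field_of_view_mm / pixels


def frames_per_second(line_speed_m_per_min: float, frame_length_mm: float) -> float:
    """Frame rate needed so consecutive frames tile the moving fabric without gaps."""
    speed_mm_per_s = line_speed_m_per_min * 1000.0 / 60.0
    return speed_mm_per_s / frame_length_mm


def smallest_defect_mm(resolution_mm: float, min_pixels: int = 3) -> float:
    """Smallest defect size, assuming (rule of thumb) it must span min_pixels pixels."""
    return resolution_mm * min_pixels


if __name__ == "__main__":
    res = mm_per_pixel(FABRIC_WIDTH_MM, SENSOR_WIDTH_PX)
    frame_len = SENSOR_HEIGHT_PX * res  # square pixels assumed
    print(f"{SENSOR_WIDTH_PX * SENSOR_HEIGHT_PX:,} pixels")
    print(f"{res:.3f} mm/pixel, frame length {frame_len:.0f} mm")
    print(f"{frames_per_second(LINE_SPEED_M_PER_MIN, frame_len):.2f} fps needed")
    print(f"smallest reliable defect ~{smallest_defect_mm(res):.2f} mm")
```

Under these assumptions each pixel covers about 0.22 mm of fabric, a frame spans 1.35 m of fabric length, and roughly 0.37 frames per second suffice for gap-free coverage. Widening the fabric or raising the line speed changes these numbers proportionally.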

The Role of Convolutional Neural Networks

Once an image is captured, the analytical heavy lifting is performed by deep learning models, most notably Convolutional Neural Networks (CNNs). Models like YOLO (You Only Look Once) and custom-architected CNNs are trained on vast datasets containing thousands of examples of both flawless and defective fabric.

They learn to identify complex patterns, including subtle defects like knots, fine lines, small stains, loose threads, and color inconsistencies that are often imperceptible to the human eye, making robust textile defect detection a reality.
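To make the idea concrete, the sketch below shows the core operation a CNN layer performs. It slides a small kernel over an image patch, applies a ReLU, and pools the result into a single score. It is not YOLO or any production model. The 3x3 kernel is hand-set to respond to a dark vertical line, a stand-in for a broken thread, whereas a trained CNN learns many such kernels from labeled data. The patch values and function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

# Hand-set kernel (a trained CNN would learn its kernels): positive response
# where a pixel is darker than its left and right neighbours.
DARK_VERTICAL_LINE: Matrix = [
    [1.0, -2.0, 1.0],
    [1.0, -2.0, 1.0],
    [1.0, -2.0, 1.0],
]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation (what deep learning frameworks call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map: Matrix) -> Matrix:
    """Zero out negative activations, as a CNN nonlinearity does."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def defect_score(patch: Matrix, kernel: Matrix) -> float:
    """Global max pooling over the activated feature map."""
    return max(max(row) for row in relu(conv2d_valid(patch, kernel)))


def make_patch(size: int = 8, line_col: int = -1) -> Matrix:
    """Uniform synthetic fabric patch; optionally darken one column (hypothetical defect)."""
    patch = [[0.5] * size for _ in range(size)]
    if line_col >= 0:
        for row in patch:
            row[line_col] = 0.0
    return patch


if __name__ == "__main__":
    print("clean:", defect_score(make_patch(), DARK_VERTICAL_LINE))
    print("defective:", defect_score(make_patch(line_col=4), DARK_VERTICAL_LINE))
```

The clean patch scores 0.0 and the patch with a dark column scores 3.0. A real detector stacks many learned layers, but each one repeats this slide, activate, and pool pattern.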

Benchmarking Detection Model Performance

The theoretical promise of AI is validated by measurable performance benchmarks from various real-world and experimental models. For QA Managers and Operations Directors, these metrics provide the tangible evidence needed to justify investment, demonstrating a clear and reliable return.

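For technical teams, they offer a baseline for what is achievable. The data below, gathered from multiple studies, highlights the efficacy of different models on specific datasets. Because each figure comes from its own dataset and metric (accuracy, mAP, or detection rate), the results are best read as individual reference points rather than a head-to-head ranking.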

| Model Name | Accuracy / Performance Metric | Defect Types / Dataset Context |
| --- | --- | --- |
| AlexNet (Pre-trained) | 92.60% Max Accuracy | General classification of textile defects in simulations. |
| YOLOv8n | 84.8% mAP (mean Average Precision) | 7 defect classes on data from an active textile mill. |
| DetectNet (Pre-trained) | 93% and 96% Accuracy (two models) | Distinguishing between defective and non-defective fabric. |
| Custom VGG-16 | 73.91% Accuracy | Defects on pattern, textured, and plain fabrics. |
| “Wise Eye” System | >90% Detection Rate | Over 40 common types of fabric defects in lace. |
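The metrics in this table are computed differently, which matters when comparing them. The Python sketch below derives accuracy, precision, and recall (detection rate) from confusion-matrix counts. It also shows intersection over union (IoU), the box-overlap measure detectors such as YOLO rely on. In mAP evaluation, a predicted box counts as a true positive only when its IoU with a ground-truth box exceeds a threshold, commonly 0.5. The full mAP computation, which averages precision over recall levels and classes, is not shown. The counts and boxes are made up for illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), top-left and bottom-right corners


def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    """Share of all samples classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def precision(tp: int, fp: int) -> float:
    """Share of flagged samples that really are defective."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Share of real defects that were caught, i.e. the detection rate."""
    return tp / (tp + fn)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical run: 1,000 samples, 100 of them defective.
    tp, fn, fp, tn = 90, 10, 30, 870
    print(f"accuracy  {accuracy(tp, fp, tn, fn):.2f}")
    print(f"precision {precision(tp, fp):.2f}")
    print(f"recall    {recall(tp, fn):.2f}")
    print(f"IoU       {iou((0, 0, 10, 10), (5, 5, 15, 15)):.3f}")
```

With these made-up counts, accuracy is 96% while precision is only 75%. This is why a single headline number says little without its metric and dataset, especially when defective samples are rare.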

Global Perspectives on System Integration

The adoption of automated inspection is not a localized trend but a global industrial movement, with distinct initiatives and success stories emerging worldwide. This widespread implementation underscores the technology’s maturity and its role as a new standard for quality control in competitive markets. The following examples showcase how different regions are leveraging this technology.

Success Stories from Asia

In China, integrated systems like “Wise Eye” are already making a significant impact. Capable of identifying over 40 common fabric defects with a detection rate exceeding 90%, this system has been shown to boost production capacity by 50% in lace factories by improving inspection accuracy from the manual rate of 65% to an automated rate of 91.7%. This demonstrates a fully realized solution deployed at scale.
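The jump from 65% to 91.7% looks more dramatic when expressed as missed defects. The Python sketch below assumes the quoted "accuracy" figures can be read as detection rates, which is an interpretation rather than something the source states. It uses a hypothetical batch of 10,000 defects and computes how many slip through under each rate.

```python
MANUAL_RATE = 0.65      # manual inspection rate quoted above
AUTOMATED_RATE = 0.917  # automated rate quoted above


def miss_rate(detection_rate: float) -> float:
    """Fraction of defects that go undetected (assumes the rate is a detection rate)."""
    return 1.0 - detection_rate


def missed_defects(total_defects: int, detection_rate: float) -> int:
    """Expected number of defects that slip through, rounded to a whole count."""
    return round(total_defects * miss_rate(detection_rate))


if __name__ == "__main__":
    batch = 10_000  # hypothetical number of defects
    manual = missed_defects(batch, MANUAL_RATE)
    automated = missed_defects(batch, AUTOMATED_RATE)
    print(manual, automated)
    print(f"{miss_rate(MANUAL_RATE) / miss_rate(AUTOMATED_RATE):.1f}x fewer misses")
```

Under that reading, misses drop from 3,500 to 830 per 10,000 defects, roughly a fourfold reduction.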

European Industrial Initiatives

In Europe, the focus extends to both implementation and strategic enablement. Germany’s government has launched initiatives like “Mittelstand 4.0 Kompetenzzentrum Textil vernetzt” to help small and medium-sized textile enterprises adopt digitalization and AI to remain competitive.

Simultaneously, research consortia are driving innovation. A project by Eurecat and Canmartex in Spain uses photonics and AI not just for detection but for prediction, aiming to reduce manufacturing flaws by over 50% and directly addressing waste and sustainability. This reflects a mature understanding of AI as a tool for proactive process optimization, a core part of advanced textile defect detection.


Accelerating Vision System Prototyping

For Machine Learning Engineers and R&D Specialists, a primary obstacle to innovation is the reliance on physical hardware. The process of acquiring, setting up, and testing with expensive industrial cameras creates significant delays and budget constraints.

This hardware-dependent cycle limits the ability to experiment with different setups and rapidly iterate on new models. At AI-Innovate, we recognize that true agility comes from decoupling software development from physical hardware constraints.

The solution lies in robust simulation. By using a “virtual camera” or camera emulator, development teams can test their vision applications in a purely software-based environment. This approach offers several key advantages:

- Accelerated Development: Test ideas and validate software in hours, not weeks.

- Reduced Costs: Eliminate the need for expensive upfront hardware investment for prototyping.

- Enhanced Flexibility: Simulate a wide range of camera models, lighting conditions, and defect scenarios that would be impractical to replicate physically.

- Seamless Collaboration: Enable remote teams to work on the same project without needing to share physical equipment.
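The decoupling idea can be sketched in a few lines. In the Python sketch below, application code depends only on a minimal camera interface, so a software-only camera can stand in for hardware during prototyping. The `Camera` protocol, `SimulatedCamera` class, and toy detector are all hypothetical illustrations. They are not the AI2Cam API or any vendor SDK. Brightness stands in for lighting conditions, and a dark vertical line stands in for a broken thread.

```python
import random
import statistics
from typing import List, Protocol

Frame = List[List[float]]


class Camera(Protocol):
    """Hypothetical minimal interface the vision application depends on."""

    def grab(self) -> Frame: ...


class SimulatedCamera:
    """Hypothetical software-only camera producing synthetic fabric frames."""

    def __init__(self, width: int, height: int, brightness: float = 0.5,
                 defect_probability: float = 0.0, seed: int = 0) -> None:
        self.width = width
        self.height = height
        self.brightness = brightness              # stands in for lighting conditions
        self.defect_probability = defect_probability
        self._rng = random.Random(seed)           # seeded for reproducible test runs

    def grab(self) -> Frame:
        frame = [[self.brightness] * self.width for _ in range(self.height)]
        if self._rng.random() < self.defect_probability:
            col = self._rng.randrange(self.width)
            for row in frame:
                row[col] = 0.0                    # simulated broken thread
        return frame


def looks_defective(frame: Frame, contrast_threshold: float = 0.2) -> bool:
    """Toy detector: flags pixels much darker than the frame's median brightness."""
    pixels = [p for row in frame for p in row]
    reference = statistics.median(pixels)
    return any(p < reference - contrast_threshold for p in pixels)


def count_flagged(camera: Camera, n_frames: int) -> int:
    """Application logic: works with any object that provides grab()."""
    return sum(looks_defective(camera.grab()) for _ in range(n_frames))


if __name__ == "__main__":
    dim_line = SimulatedCamera(16, 8, brightness=0.4, defect_probability=0.3, seed=1)
    bright_line = SimulatedCamera(16, 8, brightness=0.9, defect_probability=0.3, seed=1)
    print(count_flagged(dim_line, 100), count_flagged(bright_line, 100))
```

Swapping in a real camera later would mean writing a second class with the same `grab()` method, leaving `count_flagged` and the detector untouched.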

From Insight to Industrial Application

Understanding the data, the technology, and the global trends is the first step. The next is translating that knowledge into a reliable, high-performance system on your own factory floor. This requires a partner with deep expertise in both industrial processes and applied artificial intelligence.

Our solutions are designed to turn these insights into action. For Operations Directors, our AI2Eye system delivers a complete, real-time quality control solution that reduces waste and boosts efficiency.

For R&D specialists, our AI2Cam virtual camera emulator empowers your team to innovate faster and more affordably. Contact our experts to discover how we can tailor these tools to your specific operational needs.

Conclusion

Automating quality control is no longer a futuristic concept but a present-day competitive necessity for the textile industry. By moving beyond the inherent limitations of manual inspection, manufacturers can achieve unparalleled levels of efficiency, quality, and waste reduction. A well-implemented strategy for Textile Defect Detection is a direct investment in brand reputation and operational excellence.