The vision of the fully autonomous ‘smart factory’ rests upon a single, foundational capability: a system’s capacity for precise self-awareness and self-correction. This intelligent oversight, delivered in practice through anomaly detection, is the bedrock of future industrial efficiency and resilience, moving operations from reactive to predictive.

AI-Innovate is dedicated to building this future, developing the practical AI tools that turn this vision into an operational reality for our clients. This article serves as a technical blueprint for this core function, dissecting the key methodologies and real-world applications that power the intelligent factory.

Defining Industrial Anomalies

An industrial anomaly is not merely any variation; it is a specific, unexpected event or pattern that deviates significantly from the established normal behavior of a manufacturing process. This distinction is critical.

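While normal process variation is an inherent part of any operation, anomalies often signal underlying issues such as equipment malfunction or quality degradation. They generally fall into three types:

- Point anomalies: a single outlier data point, such as a sudden pressure spike.

- Contextual anomalies: a reading that is normal in one context but not in another, such as a temperature that is routine while a machine is running but abnormal while it is idle.

- Collective anomalies: a series of data points that each look normal but are anomalous as a group, such as a sensor reporting exactly the same value for an unusually long stretch.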
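To make the three categories concrete, the sketch below flags each kind in plain Python. It is illustrative only. The thresholds (k = 3 standard deviations, a five-sample flat-line window), the per-context baseline, and all sample readings are assumptions chosen for the example and do not come from any real process.

```python
from statistics import mean, pstdev


def point_anomalies(values, k=3.0):
    """Indices of single readings more than k standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    return [i for i, v in enumerate(values) if sigma > 0 and abs(v - mu) > k * sigma]


def contextual_anomalies(readings, baseline, k=3.0):
    """Flag readings that are abnormal for their own context.

    readings: list of (context, value) pairs.
    baseline: {context: (mean, std)} learned from normal history (assumed given).
    """
    flagged = []
    for i, (context, value) in enumerate(readings):
        mu, sigma = baseline[context]
        if abs(value - mu) > k * sigma:
            flagged.append(i)
    return flagged


def collective_anomalies(values, window=5, min_range=1e-6):
    """Start indices of windows whose readings are individually plausible but
    suspicious together: here, a flat line that suggests a stuck sensor."""
    return [
        i
        for i in range(len(values) - window + 1)
        if max(values[i:i + window]) - min(values[i:i + window]) < min_range
    ]


pressure = [5.0, 5.1, 4.9, 5.0, 5.2, 4.8, 5.0, 5.1, 4.9, 5.0, 9.0, 5.0, 5.1, 4.9, 5.0]
print(point_anomalies(pressure))  # [10]: the pressure spike

baseline = {"running": (70.0, 2.0), "idle": (30.0, 2.0)}
spindle_temp = [("running", 71.0), ("idle", 29.5), ("idle", 70.0), ("running", 69.0)]
print(contextual_anomalies(spindle_temp, baseline))  # [2]: 70 °C is normal only while running

flow = [20.1, 19.8, 20.3, 21.0, 21.0, 21.0, 21.0, 21.0, 19.9]
print(collective_anomalies(flow))  # [3]: five identical readings in a row
```

In the contextual case, 70 °C at index 2 is unremarkable in isolation because the same value is normal while the machine is running. Only the context makes it an anomaly.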

Traditional Statistical Process Control (SPC) methods rely on fixed control limits computed once from historical data, and they frequently fall short in today’s dynamic environments. Classic control charts typically track one variable at a time and do not adapt as operating conditions change, so they struggle with complex, multi-variable processes. This makes a more intelligent approach to Anomaly Detection in Manufacturing not just beneficial, but necessary for competitive survival.
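The sketch below shows a minimal version of the fixed-limit check described above. It assumes Shewhart-style limits of mean ± 3 standard deviations, computed once from an in-control baseline. The population standard deviation is used for simplicity; real control charts often estimate sigma from moving ranges or subgroups.

The sample data are invented to expose the weakness. A spike is caught, but a slow drift that never crosses a static limit is not. Supplementary run rules, such as the Western Electric rules, can catch some trends, but the basic limit check cannot.

```python
from statistics import mean, pstdev


def control_limits(baseline, k=3.0):
    """Static lower/upper limits: mean +/- k standard deviations of a baseline."""
    mu, sigma = mean(baseline), pstdev(baseline)
    return mu - k * sigma, mu + k * sigma


def out_of_control(values, limits):
    """Indices of readings outside the fixed limits."""
    lcl, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


baseline = [9.5, 10.5] * 10            # in-control history: mean 10.0, std 0.5
limits = control_limits(baseline)
print(limits)                           # (8.5, 11.5)

spike = [10.0, 12.0, 10.1]
drift = [10.0 + 0.05 * t for t in range(20)]   # creeps from 10.0 toward 10.95

print(out_of_control(spike, limits))    # [1]
print(out_of_control(drift, limits))    # []: the trend never crosses a static limit
```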

Core Detection Methodologies

Identifying these critical deviations requires a robust set of technical approaches that have evolved significantly. While each serves a distinct purpose, they collectively form a powerful toolkit for engineers and data scientists. Understanding these core methodologies is the first step toward building a resilient production environment. The main categories are:

Supervised & Unsupervised Learning

Supervised methods are highly effective when historical data is well-labeled, allowing the model to be trained on known examples of both normal and anomalous behavior. However, the most dangerous anomalies are often the ones never seen before.

This is where unsupervised learning excels. By learning the intricate patterns of normal operation, these algorithms can flag any deviation from that learned state as a potential anomaly, making them indispensable for discovering novel failure modes.
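The contrast between the two approaches fits in a few lines of plain Python. The sketch pairs two simple methods:

- A nearest-centroid classifier (supervised). It needs labeled examples and can only output labels it has already seen.
- A k-nearest-neighbour distance score (unsupervised). It needs no labels and simply ranks points by how isolated they are.

Both are stand-ins chosen for illustration, not the specific algorithms any particular system uses. The feature names and values are hypothetical.

```python
from math import dist
from statistics import mean


# Supervised: requires labels; can only choose among classes seen in training.
def fit_centroids(samples, labels):
    """Mean feature vector for each label."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {y: tuple(mean(col) for col in zip(*xs)) for y, xs in groups.items()}


def predict(centroids, x):
    """Label of the closest centroid (Euclidean distance)."""
    return min(centroids, key=lambda y: dist(centroids[y], x))


# Unsupervised: no labels; points far from their nearest neighbours score high.
def knn_scores(points, k=2):
    """Anomaly score = mean distance to the k nearest other points."""
    scores = []
    for i, p in enumerate(points):
        nearest = sorted(dist(p, q) for j, q in enumerate(points) if j != i)[:k]
        scores.append(sum(nearest) / k)
    return scores


# Hypothetical features: (vibration RMS in mm/s, bearing temperature in °C).
train_x = [(1.0, 60.0), (1.2, 62.0), (0.9, 59.0), (4.0, 75.0), (4.5, 78.0)]
train_y = ["normal", "normal", "normal", "fault", "fault"]
centroids = fit_centroids(train_x, train_y)
print(predict(centroids, (1.1, 61.0)))  # normal
print(predict(centroids, (4.2, 77.0)))  # fault

unlabeled = [(1.0, 60.0), (1.1, 61.0), (0.9, 59.5), (1.2, 60.5), (1.0, 90.0)]
scores = knn_scores(unlabeled)
print(scores.index(max(scores)))  # 4: an overheating reading no label described
```

Because the features are not scaled, temperature dominates the distances here. In practice, features are usually standardized before distance-based methods are applied.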

Semi-Supervised Approaches

This hybrid method offers a practical middle ground, ideal for scenarios where only data from normal operations is abundant and reliable for training. The model builds a strict definition of normalcy and flags anything outside those boundaries.
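The sketch below is a minimal version of the semi-supervised idea. It assumes tabular sensor features and uses a simple per-feature z-score model in place of whatever model a production system would use. The class name `NormalProfile`, the 1.5 safety margin, and the sample readings are hypothetical. The key point is that only known-good data is needed to draw the boundary.

```python
from statistics import mean, pstdev


class NormalProfile:
    """Semi-supervised detector trained on normal-only data.

    Learns a per-feature mean and standard deviation, scores a sample by its
    largest absolute z-score, and sets the alarm threshold from the scores of
    the normal training data.
    """

    def __init__(self, normal_rows, margin=1.5):
        columns = list(zip(*normal_rows))
        self.mu = [mean(c) for c in columns]
        # Guard against zero variance in a constant feature.
        self.sigma = [pstdev(c) or 1e-9 for c in columns]
        # Threshold: worst score seen on normal data, widened by a safety margin.
        self.threshold = margin * max(self.score(r) for r in normal_rows)

    def score(self, row):
        return max(abs(v - m) / s for v, m, s in zip(row, self.mu, self.sigma))

    def is_anomaly(self, row):
        return self.score(row) > self.threshold


# Hypothetical (temperature °C, vibration RMS mm/s) from known-good operation.
normal = [(60.0, 1.0), (62.0, 1.2), (61.0, 1.1), (59.0, 0.9), (60.0, 1.0)]
profile = NormalProfile(normal)
print(profile.is_anomaly((61.0, 1.05)))  # False: inside the learned envelope
print(profile.is_anomaly((61.0, 2.0)))   # True: vibration far outside it
```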

The Power of Deep Learning

For processing the high-dimensional and complex data streams common in modern factories, such as machine vision feeds or multi-sensor arrays, deep learning models like autoencoders are transformative. An autoencoder is trained to compress and then reconstruct normal data, so inputs it reconstructs poorly are likely anomalies. Such models can learn sophisticated data representations and identify subtle, non-linear patterns that traditional statistical methods struggle to capture.
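Training a deep autoencoder needs a framework such as PyTorch or TensorFlow. As a dependency-light stand-in, this sketch uses NumPy and principal component analysis (PCA). An optimal linear autoencoder trained with squared-error loss learns the same subspace as the top principal components, so PCA is a reasonable way to show the reconstruction-error principle.

It is not a deep model, though, and cannot capture the non-linear patterns the paragraph describes. The synthetic three-sensor data and the choice of one component are assumptions.

```python
import numpy as np


def fit_linear_autoencoder(x_normal, n_components):
    """Closed-form linear 'autoencoder' fitted on normal data via PCA.

    Encoding projects onto the top principal directions; decoding maps back.
    """
    mu = x_normal.mean(axis=0)
    _, _, vt = np.linalg.svd(x_normal - mu, full_matrices=False)
    w = vt[:n_components]  # shape (n_components, n_features)
    return mu, w


def reconstruction_error(x, mu, w):
    """Squared reconstruction error per row; high values suggest anomalies."""
    z = (x - mu) @ w.T      # encode
    x_hat = z @ w + mu      # decode
    return np.sum((x - x_hat) ** 2, axis=1)


# Synthetic "normal" data: three correlated sensors driven by one latent factor.
rng = np.random.default_rng(0)
t = rng.normal(size=500)
x_train = np.outer(t, [1.0, 2.0, -1.0]) + rng.normal(scale=0.01, size=(500, 3))

mu, w = fit_linear_autoencoder(x_train, n_components=1)
samples = np.array([[1.0, 2.0, -1.0],    # follows the learned correlation
                    [2.0, -1.0, 0.0]])   # breaks it
print(reconstruction_error(samples, mu, w))  # first value small, second large
```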

The Data Ecosystem for Intelligent Detection

The sophistication of any anomaly detection model is fundamentally determined by the quality and diversity of the data it consumes. Effective systems do not rely on a single data stream; they integrate a rich ecosystem of information to build a comprehensive understanding of the operational reality. This data ecosystem typically includes several core types:

Time-Series Sensor Data

This is the lifeblood of predictive maintenance and process monitoring. High-frequency data from sensors measuring temperature, pressure, vibration, and flow rates provide a granular, real-time view of machinery health and process stability.
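For streaming sensor data, one lightweight way to adapt to the process instead of relying on a fixed threshold is to track an exponentially weighted moving average (EWMA) of the mean and variance. This sketch rests on several hypothetical choices: a smoothing factor of alpha = 0.1, a 4-sigma alarm band, a 10-sample warm-up, and made-up vibration values.

```python
class EwmaDetector:
    """Streaming detector for one sensor channel.

    Keeps an exponentially weighted moving mean and variance and flags a reading
    when it lies more than k running standard deviations from the running mean.
    """

    def __init__(self, alpha=0.1, k=4.0, warmup=10):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, x):
        self.count += 1
        if self.mean is None:          # first reading seeds the mean
            self.mean = x
            return False
        diff = x - self.mean
        flagged = self.count > self.warmup and abs(diff) > self.k * self.var ** 0.5
        if not flagged:                # keep flagged readings out of the baseline
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        return flagged


vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95] * 4 + [3.0] + [1.0] * 3
detector = EwmaDetector()
flags = [detector.update(v) for v in vibration]
print([i for i, f in enumerate(flags) if f])  # [24]
```

Excluding flagged readings from the update stops a spike from inflating the baseline. The trade-off is that a genuine, permanent level shift would keep being flagged, so a real deployment would need a re-baselining policy.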

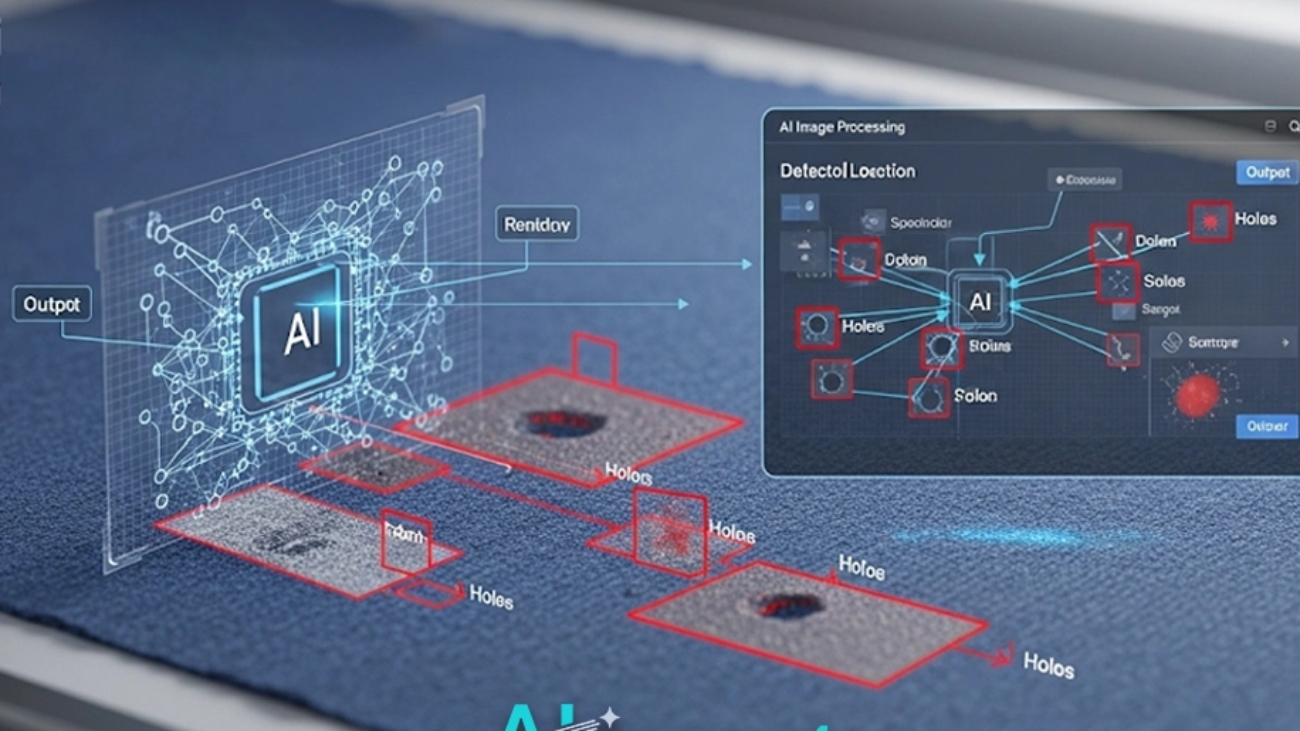

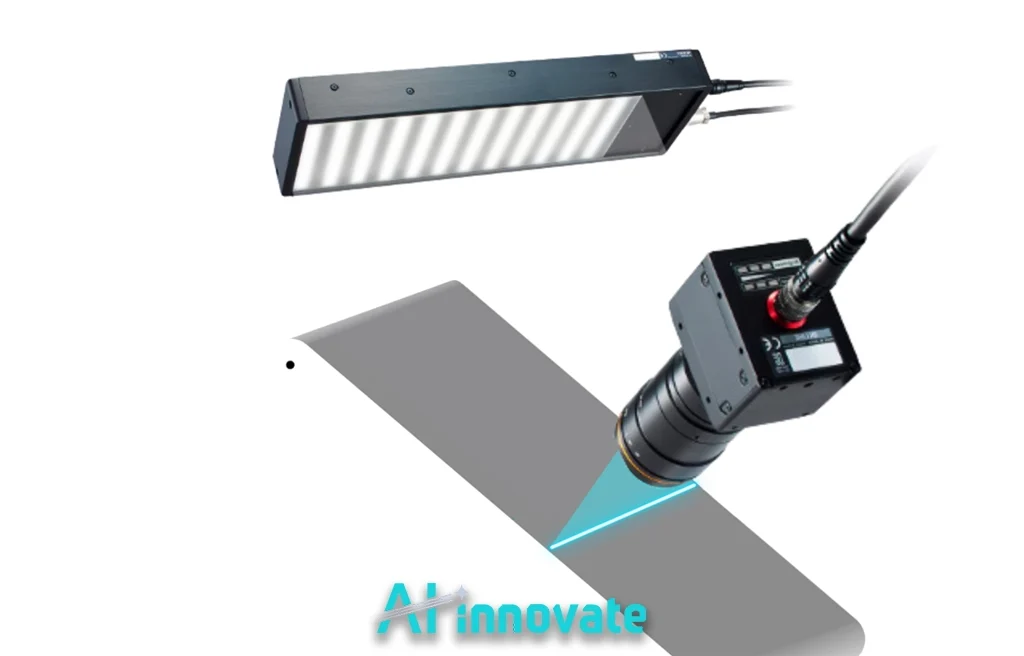

Visual Data from Vision Systems

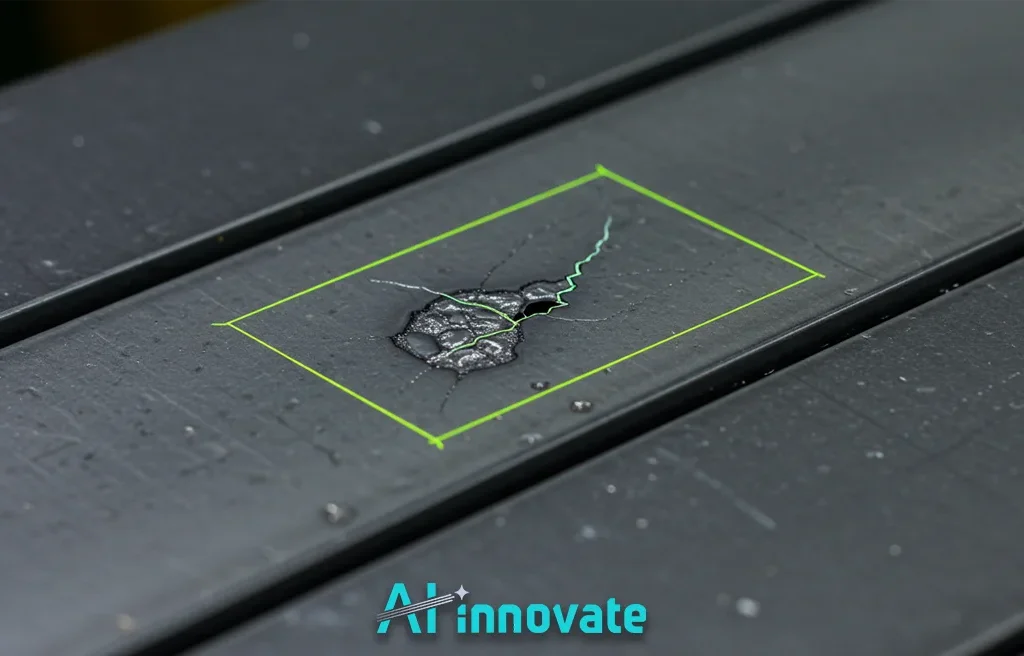

Image and video feeds from cameras on the production line are invaluable for quality control. They serve as the raw input for AI models designed to identify surface defects, assembly errors, or packaging inconsistencies that are often invisible to other sensors.
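Production systems typically apply learned models to these feeds. The underlying idea of comparing a part against a reference for "good" can still be shown with classical golden-template differencing.

This sketch is not an AI model. It assumes grayscale images that are already aligned and equally lit, and its tolerance and minimum defect size are illustrative. Those assumptions often break on real lines, which is one reason learned models are used instead.

```python
def defect_mask(image, reference, tol=30):
    """Pixel-wise comparison of a grayscale image (rows of 0-255 ints) against a
    defect-free 'golden' reference of the same size and alignment."""
    return [
        [abs(p - r) > tol for p, r in zip(row, ref_row)]
        for row, ref_row in zip(image, reference)
    ]


def has_defect(mask, min_pixels=2):
    """Report a defect only if enough pixels differ, to ignore isolated noise."""
    return sum(sum(row) for row in mask) >= min_pixels


reference = [[200] * 4 for _ in range(4)]
good_part = [[200, 205, 198, 200],
             [201, 199, 200, 210],
             [200, 200, 202, 197],
             [199, 200, 200, 200]]
scratched = [row[:] for row in reference]
scratched[1][1] = scratched[1][2] = 50   # a dark two-pixel scratch

print(has_defect(defect_mask(good_part, reference)))   # False
print(has_defect(defect_mask(scratched, reference)))   # True
```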

Contextual Operational Data

Data from Manufacturing Execution Systems (MES) or Enterprise Resource Planning (ERP) systems, such as batch IDs, raw material sources, or operator shift schedules, provides crucial context. Correlating sensor or visual data with this contextual information helps the system trace root causes, not just symptoms.
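Correlating detections with MES context can start as a simple group-by. In this sketch, the record layout (batch IDs mapped to supplier and shift fields) is a hypothetical export format, since real MES schemas differ. The events are invented.

```python
from collections import Counter


def anomaly_rate_by(events, batches, field):
    """Share of inspected items flagged as anomalous, grouped by a context field.

    events:  list of (batch_id, is_anomaly) from the detection system.
    batches: {batch_id: {field: value, ...}} exported from the MES (assumed layout).
    """
    total, flagged = Counter(), Counter()
    for batch_id, is_anomaly in events:
        group = batches[batch_id][field]
        total[group] += 1
        flagged[group] += int(is_anomaly)
    return {group: flagged[group] / total[group] for group in total}


batches = {
    "B1": {"supplier": "A", "shift": "day"},
    "B2": {"supplier": "B", "shift": "day"},
    "B3": {"supplier": "B", "shift": "night"},
}
events = ([("B1", False)] * 4
          + [("B2", True)] * 2 + [("B2", False)] * 2
          + [("B3", True)] * 3 + [("B3", False)])
print(anomaly_rate_by(events, batches, "supplier"))  # {'A': 0.0, 'B': 0.625}
print(anomaly_rate_by(events, batches, "shift"))     # {'day': 0.25, 'night': 0.75}
```

Here, both views point at supplier B and the night shift because they overlap in batch B3. Correlation of this kind narrows the search for a root cause but does not by itself prove one.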

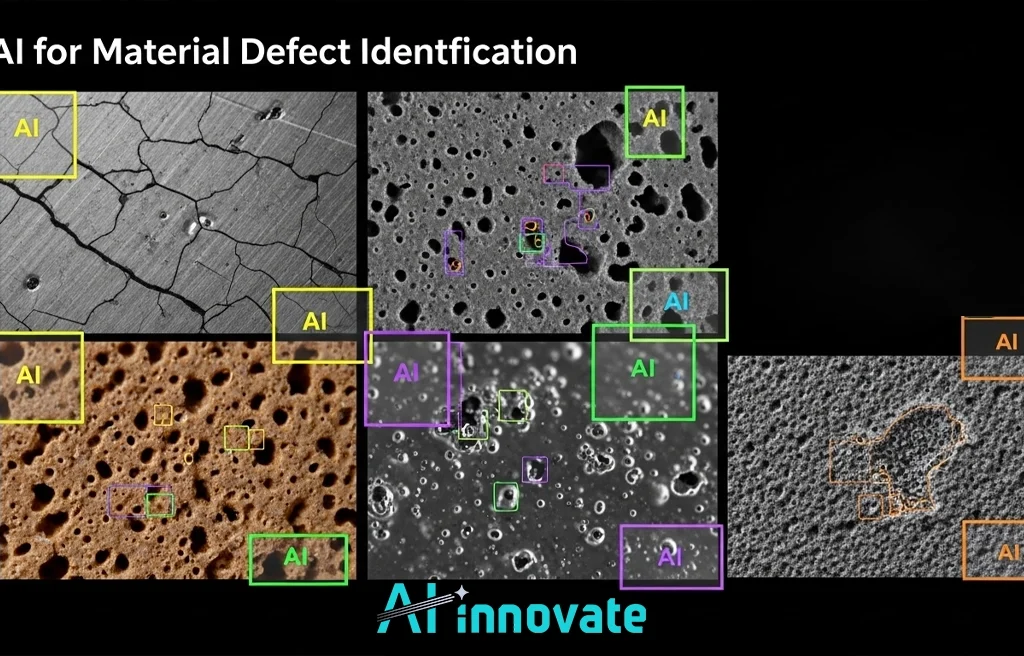

Sector-Specific Anomaly Signatures

The true power of modern anomaly detection lies in its adaptability to the unique material properties and process signatures of diverse industries. The definition of an “anomaly” is not universal; it is highly contextual. An insignificant blemish on a construction material could be a critical, multi-million-dollar failure on a semiconductor wafer. Therefore, advanced systems are tuned to identify specific types of flaws across different sectors, including:

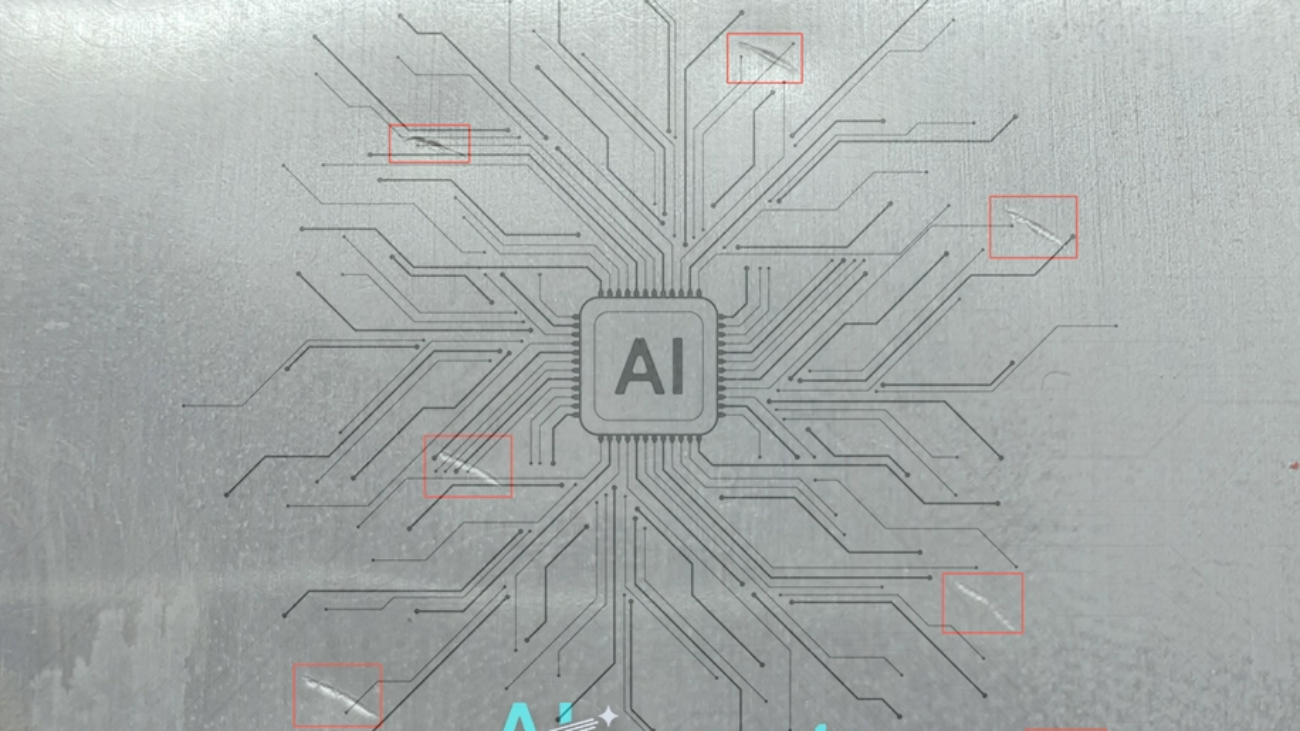

Advanced Metal and Alloy Inspection

In industries like aerospace and automotive, systems are trained to detect not only visible surface scratches or cracks but also subtle subsurface inconsistencies and micro-fractures in forged or cast metal parts by analyzing thermal imaging or acoustic sensor data.

Textile and Non-Woven Fabric Analysis

For textiles, automated visual systems identify nuanced defects that are difficult for the human eye to catch consistently during high-speed production. This includes detecting subtle color inconsistencies from dyeing processes, dropped stitches, snags, or variations in yarn thickness that affect the final product’s integrity.


Semiconductor and Electronics Manufacturing

In this ultra-high-precision field, anomaly detection operates on a microscopic level. Vision systems are critical for inspecting silicon wafers, identifying minute defects in photolithography patterns or foreign particle contamination that could render an entire microchip useless.
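Once inspection has marked failing dies, a common follow-up is to examine their spatial pattern on the wafer map. An isolated failing die is consistent with random particle contamination, while a tight cluster may point to a systematic process issue.

The sketch below groups failing dies into connected clusters with a flood fill. The 5 × 5 map, the 0/1 encoding, and the choice of 4-connectivity are assumptions for illustration.

```python
def defect_clusters(wafer_map):
    """Group failing dies (1s) on a wafer map into 4-connected clusters."""
    rows, cols = len(wafer_map), len(wafer_map[0])
    seen, clusters = set(), []
    for r in range(rows):
        for c in range(cols):
            if wafer_map[r][c] != 1 or (r, c) in seen:
                continue
            # Flood fill from this failing die using an explicit stack.
            stack, cluster = [(r, c)], []
            seen.add((r, c))
            while stack:
                y, x = stack.pop()
                cluster.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and wafer_map[ny][nx] == 1 and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            clusters.append(cluster)
    return clusters


wafer = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
print(sorted(len(c) for c in defect_clusters(wafer)))  # [1, 4]
```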

Key Operational Applications

Ultimately, the value of these methodologies is measured by their real-world impact on the factory floor. Implementing Anomaly Detection in Manufacturing is not an academic exercise; it is a strategic tool with direct applications that yield measurable returns for Operations and QA Managers. The primary value-generating applications are:

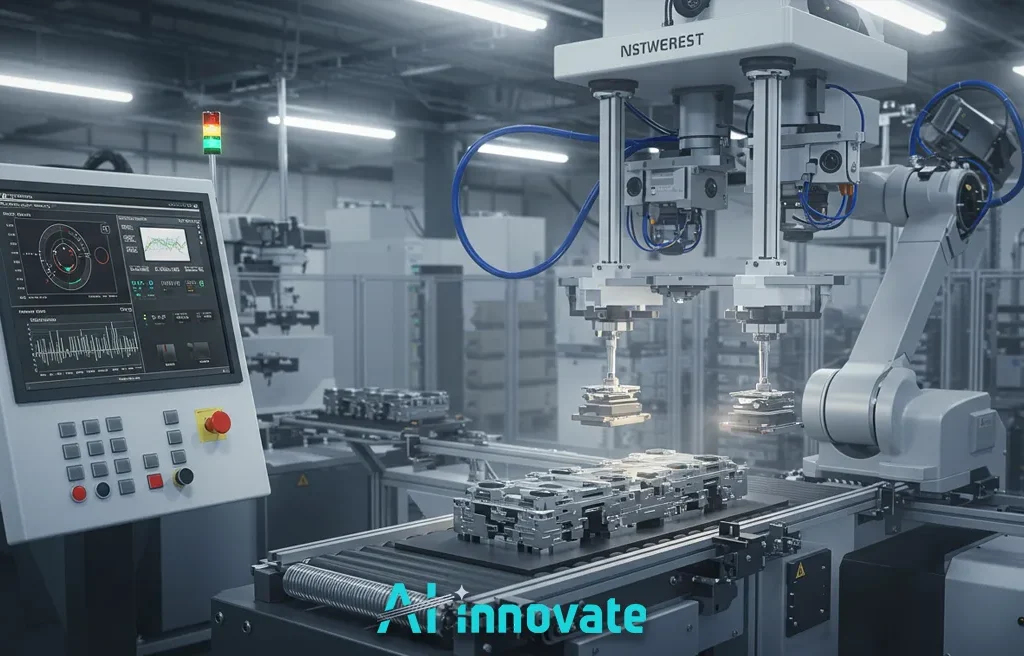

Predictive Maintenance

By analyzing data from IoT sensors on machinery, these systems can identify the faint signatures of impending equipment failure long before a catastrophic breakdown occurs. This allows maintenance to be scheduled proactively, drastically reducing unplanned downtime, which is one of the largest sources of lost revenue for many manufacturers.
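One simple way to turn a slowly rising health indicator into a maintenance lead time is to fit a trend and extrapolate it to an alarm level. This sketch is deliberately simplified. It assumes linear degradation, which real wear often does not follow, and the readings and the 4.5 mm/s alarm level are illustrative rather than drawn from any standard.

```python
def hours_until_threshold(hours, readings, threshold):
    """Fit a least-squares line to a degradation indicator and extrapolate how
    many hours after the last reading it will cross an alarm threshold.

    Returns None when the fitted trend is flat or falling.
    """
    n = len(hours)
    mean_x, mean_y = sum(hours) / n, sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in hours)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_time = (threshold - intercept) / slope
    return crossing_time - hours[-1]


# Daily vibration RMS readings (mm/s) creeping upward; values are illustrative.
hours = [0, 24, 48, 72, 96]
rms = [2.0, 2.2, 2.4, 2.6, 2.8]
print(round(hours_until_threshold(hours, rms, 4.5)))  # 204
```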

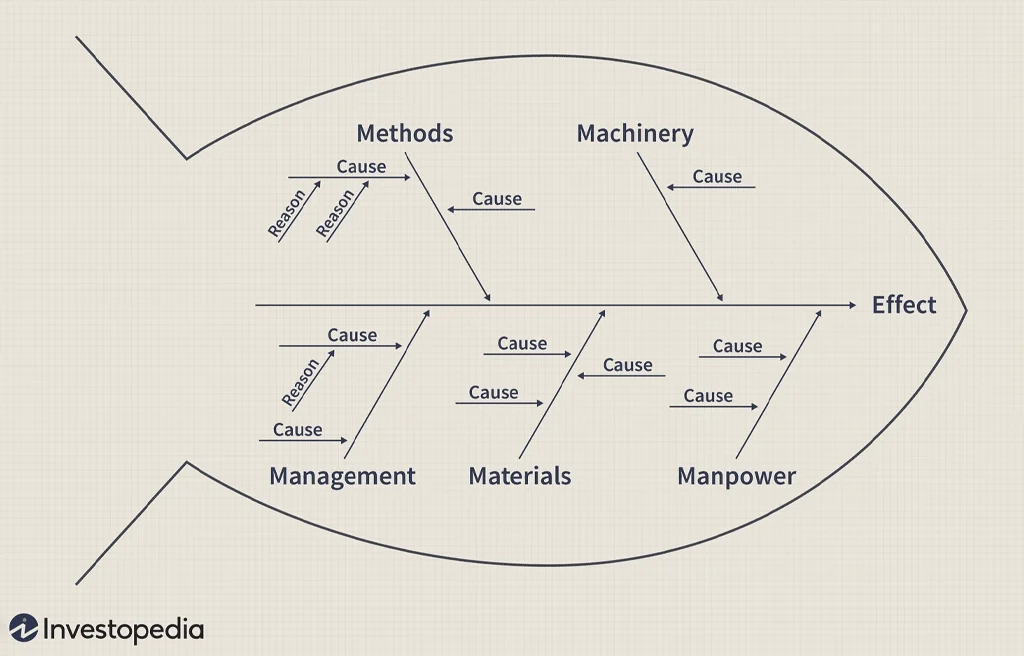

Process Optimization

Anomalies are not always related to broken equipment; they can also signal process inefficiencies. Identifying subtle deviations in parameters like temperature, flow rate, or material consistency helps engineers pinpoint bottlenecks and suboptimal configurations, enabling continuous improvement and higher overall equipment effectiveness (OEE).
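OEE is conventionally calculated as availability × performance × quality. The sketch below computes it from shift figures.

The function name and the numbers are illustrative assumptions, not data from a real line: a 480-minute shift with 48 minutes of downtime, a 36-second ideal cycle, and 684 parts of which 650 are good. These work out to 0.9 × 0.95 × (650 / 684) ≈ 0.81.

```python
def oee(planned_min, downtime_min, ideal_cycle_s, total_count, good_count):
    """Return (availability, performance, quality, OEE) for one production period."""
    run_min = planned_min - downtime_min
    availability = run_min / planned_min
    # Ideal time to make everything that was produced, vs. time actually run.
    performance = (ideal_cycle_s * total_count) / (run_min * 60)
    quality = good_count / total_count
    return availability, performance, quality, availability * performance * quality


a, p, q, score = oee(planned_min=480, downtime_min=48, ideal_cycle_s=36,
                     total_count=684, good_count=650)
print(f"A={a:.2f} P={p:.2f} Q={q:.2f} OEE={score:.2f}")  # A=0.90 P=0.95 Q=0.95 OEE=0.81
```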

Practical Integration Challenges

Implementing these advanced systems is not without its hurdles, and a successful deployment requires navigating several practical challenges. One of the most common is imbalanced data: anomaly examples are exceedingly rare compared to normal operational data, which makes it difficult for some models, particularly supervised ones, to learn effectively.
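Imbalance also distorts evaluation. With hypothetical labels in which 0.5% of samples are anomalies, a "model" that never raises an alarm still scores 99.5% accuracy while catching nothing.

The sketch below computes accuracy, precision, and recall by hand. It then derives inverse-frequency class weights using the same formula as scikit-learn's `class_weight="balanced"` heuristic: n_samples / (n_classes × count per class). The function names are illustrative.

```python
def confusion_metrics(y_true, y_pred):
    """Accuracy, precision and recall with 1 = anomaly, 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


def balanced_class_weights(y):
    """Inverse-frequency weights: n_samples / (n_classes * count_per_class)."""
    classes = sorted(set(y))
    return {c: len(y) / (len(classes) * y.count(c)) for c in classes}


y_true = [0] * 995 + [1] * 5         # 0.5% anomalies
always_normal = [0] * len(y_true)    # a "model" that never raises an alarm
print(confusion_metrics(y_true, always_normal))  # (0.995, 0.0, 0.0)
print(balanced_class_weights(y_true)[1])         # 100.0: each anomaly counts 100x
```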

Industrial sensor data is also often noisy and requires careful pre-processing before it is useful. Perhaps the most significant challenge, however, is integration with legacy factory systems. Ensuring that a new AI solution can communicate seamlessly with existing Manufacturing Execution Systems (MES) and SCADA infrastructure is critical for creating a truly unified and intelligent operation.
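For impulsive sensor noise, such as one-sample transmission glitches, a rolling median is a common pre-processing step. Unlike a moving average, it removes isolated outliers while preserving genuine step changes. The window size of 3 and the readings below are illustrative assumptions.

```python
from statistics import median


def median_filter(values, window=3):
    """Centered rolling median; windows are truncated at the edges."""
    half = window // 2
    return [median(values[max(0, i - half):i + half + 1]) for i in range(len(values))]


# A one-sample glitch (55) followed by a genuine level change from 10 to 20.
raw = [10, 10, 55, 10, 10, 20, 20, 20]
print(median_filter(raw))  # [10.0, 10, 10, 10, 10, 20, 20, 20.0]
```

The same property is also a caveat. A filter that erases glitches will erase genuine one-sample spikes too, so filtering should be matched to the failure signatures the detector needs to see.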

Automated Visual Quality Control

Nowhere are the limitations of manual processes more apparent than in visual quality control. Human inspection is inherently subjective, prone to fatigue, and simply cannot scale to meet the demands of high-speed production.

This leads to missed defects, unnecessary waste, and inconsistent product quality, directly impacting a company’s reputation and bottom line. A robust system for Anomaly Detection in Manufacturing directly addresses this long-standing industrial problem.

The goal is to move beyond human limitations with a system that is consistent, tireless, and precise. To meet this critical need, we developed a specialized solution:

A Solution for Modern Manufacturing

AI2Eye is an advanced quality control system designed specifically to automate visual inspection. Leveraging machine vision and AI, it operates in real time on the production line, identifying surface defects, imperfections, and process inefficiencies with a level of accuracy that a human inspector cannot achieve.

By catching flaws early, AI2Eye drastically reduces scrap, streamlines production, and guarantees a higher, more consistent standard of quality, giving manufacturers a decisive competitive edge.


Streamlining Vision System Prototyping

For the Machine Learning Engineers and R&D Specialists tasked with building these next-generation vision systems, a different set of challenges emerges. The development and prototyping lifecycle is often slowed by a critical dependency on physical hardware.

Sourcing, setting up, and reconfiguring expensive industrial cameras for different testing scenarios consumes valuable time and budget, creating project delays and limiting the scope of innovation. To remove this bottleneck, a new category of development tool is required. We offer a tool designed to address this pain point directly:

Accelerating Innovation with AI2Cam

AI2Cam is a powerful camera emulator that decouples vision system development from physical hardware. It allows engineers to simulate a wide range of industrial cameras and imaging conditions directly from their computers. The key benefits are transformative:

- Faster Prototyping: Test software and model ideas in a fraction of the time.

- Cost Reduction: Eliminate the need for purchasing and maintaining expensive test cameras.

- Increased Flexibility: Simulate countless scenarios that would be impractical to set up physically.

- Remote Collaboration: Enable teams to work together seamlessly from any location.

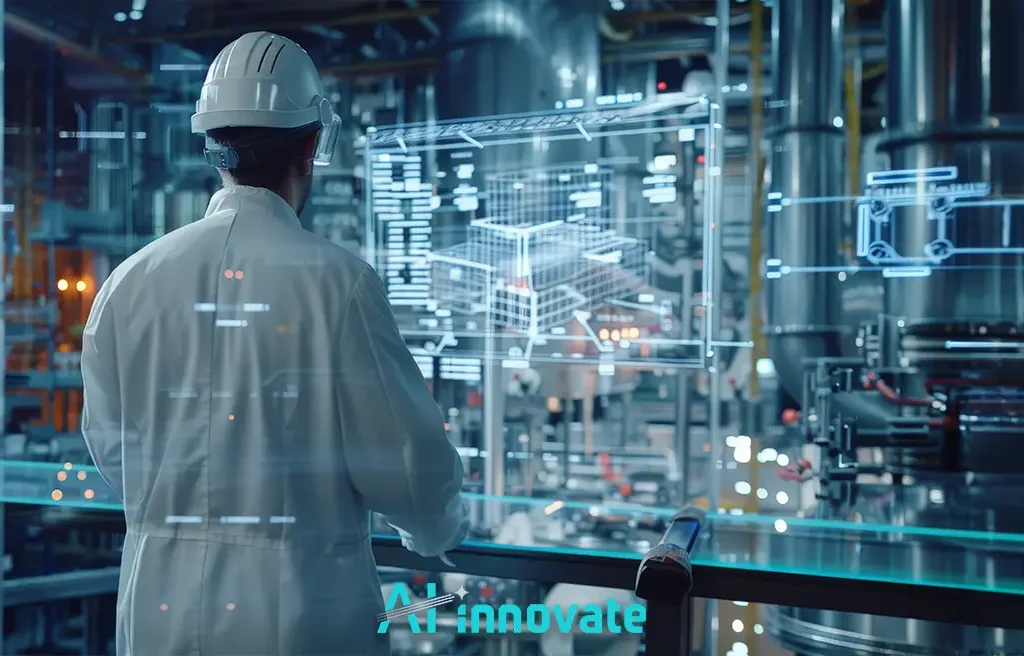

System-Wide Anomaly Intelligence

The ultimate goal extends beyond identifying individual faults. The future lies in creating system-wide anomaly intelligence, where data from every corner of the factory—from production lines and supply chains to energy consumption and environmental controls—is aggregated and analyzed holistically.

This integrated approach transforms Anomaly Detection in Manufacturing from a localized tool into a centralized intelligence hub. It provides a comprehensive, real-time understanding of the entire operational health of the enterprise, enabling leaders to make smarter, data-driven decisions at a strategic level and fostering a culture of true continuous improvement. This is the foundation of the genuinely smart factory.


Conclusion

Moving from traditional monitoring to intelligent anomaly detection is a defining step for any modern manufacturer. As we have explored, this involves understanding the nature of industrial anomalies, selecting the right detection methodologies, and applying them to solve high-value problems like quality control and predictive maintenance. This strategic adoption is essential for reducing waste, boosting efficiency, and securing a competitive advantage. Companies like AI-Innovate are at the forefront, providing the practical, powerful tools necessary to turn this vision into reality.